People seem to think that LLMs are like a magic wish-granting genie with unlimited wishes.

They tend to ignore the "but what if the genie is hallucinating and can't tell truth from falsehood?" side of the bargain.

https://thepit.social/@peter/114601765045842916

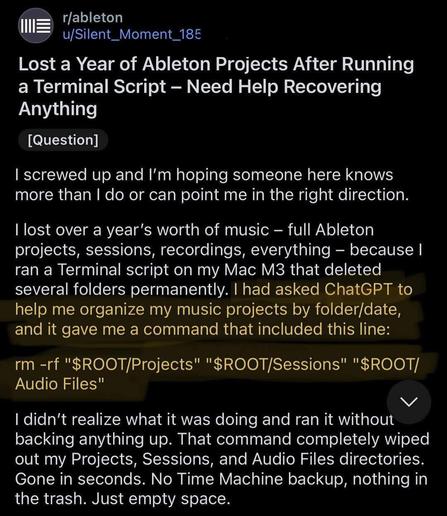

The Pit: Peter (@peter@thepit.social)

gonna be a lot more of this going around. hell of a way to learn bash.

Kathmandu @Kathmandu@stranger.social

People have been thinking that since the earliest days of home computers. There are whole novels written where you can tell the author had heard of computers but had never actually used one. Once the author actually got some computer experience, their fictional computers stopped being digital genies.

So it's a cognitive hole that humans are going to fall into with every new technology that even vaguely seems like it can think.